Of late, most of my discussions with business leaders have included a segue into Generative AI. While most leaders and business teams want to do something with Generative AI, very few know exactly what they can use it for. What is more, Generative AI feels like magic to most business teams, and even technology leaders don’t have an intuitive understanding of it. This leads to a fear of going down the rabbit hole with no clear direction. While many enterprises have taken a first stab at GenAI, most have backed out after the first setback (which is inevitable in GenAI implementations), citing myths and misconceptions prevalent in the market.

In this article, the first of a series in which I aim to demystify Generative AI for dummies, I describe the underlying concepts of Generative AI through simple examples and analogies.

Disclaimer: If you are looking for the underlying mathematics and technology details behind Generative AI, this series is not for you. Also, I may oversimplify certain concepts for ease of understanding.

What is Generative AI?

As the name suggests, Generative AI consists of two components.

Generative indicates that it generates or creates something (e.g. new content, images etc.).

AI, or Artificial Intelligence, is the ability to solve problems that are dynamic in nature (e.g. find the number of people in a photograph) as opposed to static problems (e.g. what is 2+1).

The combination of these two has given rise to an AI that is versatile and addresses an extremely broad range of problems, as opposed to traditional AI, which mostly answered specific questions for the problem it was built to solve (e.g. forecast the profit for the next quarter based on past data).

How does Generative AI work?

The fundamental principle behind how Generative AI works is as simple as “putting one foot in front of the other to get anywhere”. The destinations are varied, like “a text”, “an image”, “a video”, “a 3D model” etc. However, the underlying principle is the same.

Let me explain this for the text-based destination, which is achieved using something called a Large Language Model or LLM. Do not be daunted by the term. You can think of an LLM as a black box that knows what comes next for any combination of words, based on how they have been used in the past. Just like we teach a child that the next thing after “My name is …” is their name, the LLM has been taught what comes next using vast amounts of books and articles available on the internet (this is called training data). So basically, if you give an input like “The big brown fox jumped over the lazy” and ask what comes next, it will output “dog”.
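To make the "what comes next" idea concrete, here is a toy sketch in Python. It is not how a real LLM is built: it only counts which word followed which in a few made-up sentences and then guesses the most frequent follower. The training text and the names `train` and `predict_next` are illustrative assumptions, not part of any real product.

```python
from collections import Counter, defaultdict

# Tiny, made-up "training data"; a real LLM learns from vastly more text.
TRAINING_TEXT = (
    "the big brown fox jumped over the lazy dog . "
    "the lazy dog slept all day . "
    "the lazy dog ignored the fox ."
)

def train(text):
    """Count, for every word, which words followed it in the training text."""
    words = text.split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def predict_next(followers, prompt):
    """Guess the next word by looking only at the last word of the prompt."""
    last_word = prompt.lower().split()[-1]
    if last_word not in followers:
        return None  # the toy model has never seen this word
    return followers[last_word].most_common(1)[0][0]

model = train(TRAINING_TEXT)
print(predict_next(model, "The big brown fox jumped over the lazy"))  # dog
```

A real LLM looks at far more than the last word and works with learned statistical patterns rather than a lookup of counts, but the question it answers is the same: given this text, what is likely to come next?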

All of the intelligence that you perceive in a text-based Generative AI application like ChatGPT is essentially a response pieced together by repeatedly guessing the next word (or phrase) based on the training data the LLM learned from.
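The "pieced together" part can be sketched as a loop: guess one word, add it to the text, and guess again. The snippet below is a deliberately simplified illustration under the same assumption as before (a word-count table standing in for an LLM); the training sentence and the function names `train` and `generate` are made up for this example.

```python
from collections import Counter, defaultdict

# Made-up training data for illustration only.
TRAINING_TEXT = "my name is sam . i like tea ."

def train(text):
    """Count which word follows which in the training text."""
    words = text.split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def generate(followers, prompt, max_words=5):
    """Build a response one word at a time, each guess based on the text so far."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = followers.get(words[-1])
        if not candidates:
            break  # nothing learned about this word, so stop
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

model = train(TRAINING_TEXT)
print(generate(model, "My name", max_words=2))  # my name is sam
```

Each pass through the loop is one "foot in front of the other": the output of one guess becomes part of the input for the next.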

Other destinations (or modalities) for a Generative AI system can be pictures, videos, 3D models etc. I will be describing how those work in subsequent articles.

Is ChatGPT an LLM?

No. An LLM is a piece of software that uses what it has learned from its training data to produce (guess) responses to the task at hand. It is like the manuscript of a bestselling novel that only the author has. He has it, but you as a reader have no access to it. Then the author gives the manuscript to a publisher, who prints it as a book that you can buy. That book of paper and ink gives you access to the author’s novel.

ChatGPT is the book equivalent that provides access to the LLM that sits within it.

Therefore, ChatGPT is not the LLM but the application or interface through which you can interact with the underlying LLMs: GPT-4, GPT-4o and GPT-4o mini. In the same way, the DeepSeek and Gemini applications are the interfaces exposing the underlying DeepSeek-R1 and Gemini LLMs respectively. These applications are also called chatbots.
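For readers who like to see the split in code, here is a rough sketch of the "book versus manuscript" idea. Everything in it is hypothetical: `toy_model` stands in for an LLM and `ChatApp` stands in for a chatbot application. It does not reflect how ChatGPT or any other product is actually implemented.

```python
def toy_model(prompt):
    """Stand-in for an LLM: text in, text out. Purely illustrative."""
    last_line = prompt.splitlines()[-1]
    return f"You said -> {last_line}"

class ChatApp:
    """Stand-in for a chatbot: keeps the conversation and calls the model."""

    def __init__(self, model):
        self.model = model    # the app can be pointed at a different model
        self.history = []     # the app, not the model, remembers the chat

    def send(self, user_message):
        self.history.append(("user", user_message))
        # The app decides what text the model actually gets to see.
        prompt = "\n".join(f"{role}: {text}" for role, text in self.history)
        reply = self.model(prompt)
        self.history.append(("assistant", reply))
        return reply

app = ChatApp(toy_model)
print(app.send("Hello"))
```

The point of the sketch is that the model can be swapped without changing the application, just as the same chatbot can sit on top of different underlying LLMs.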

Conclusion

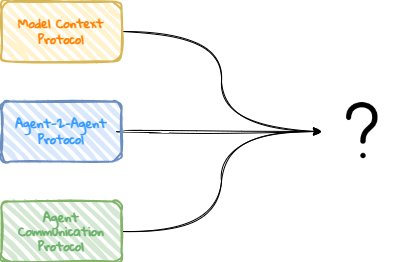

When an enterprise decides to use Generative AI, it is often faced with questions like “Should we train our own LLM?”, “Why should we fine-tune our LLM?” and “What the hell is RAG (other than a tattered piece of cloth 😄)?”. These are some of the questions I will be addressing in my next article.

Request

Your feedback is a gift. As I put multiple hours into writing these articles (and I promise that this is not generated or edited using GenAI 😄), I would highly appreciate it if you could share your feedback on whether you find this helpful.
